Connect to a Local LLM/Model Running On Your Own Computer for Ultimate Privacy and Security

Connect Ask Steve to Ollama, LM Studio, Jan and more...

You can use Ask Steve for FREE with local models. Read how.

I've recently had several conversations with very active ChatGPT users who mentioned security as a concern and didn't know that you can run LLMs locally. When you run a model locally, it runs entirely on your own computer. The primary benefit is that your requests never leave your machine, so all your data stays private. The secondary benefit is that it's FREE.

Using local AI models does have drawbacks. First, you need to be able to download and store a large model file (e.g. 3+ GB). Second, you need a reasonably powerful computer to run these models effectively, otherwise they'll be too slow to be of use. Third, the quality of locally-run models typically lags behind cloud-based models - although they are more than good enough for many use cases. Finally, even though nothing has to travel over the network, responses can still be slower than with cloud alternatives, because cloud models run on giant server farms with far more compute than a personal computer.
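To see where a multi-gigabyte file size comes from, here is a rough back-of-the-envelope sketch. It assumes the file size is roughly the parameter count times the bits stored per weight, and it assumes 4-bit quantization as the default; both are simplifications I'm making for illustration (real files also carry some metadata and often mix precisions). `estimate_model_size_gb` is a hypothetical helper name, not part of any of these tools.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float = 4.0) -> float:
    """Rough model file size in GB: parameters x bits per weight / 8 bits per byte.

    Assumption: ignores file metadata and any layers kept at higher precision.
    """
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("parameter count and bits per weight must be positive")
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9  # decimal gigabytes


# Illustrative parameter counts only; check the size each app lists before downloading.
for label, params in [("7B", 7), ("8B", 8), ("70B", 70)]:
    size = estimate_model_size_gb(params)
    print(f"{label} parameters at 4 bits per weight: about {size:.1f} GB")
```

By this estimate a 7-billion-parameter model at 4 bits per weight comes to about 3.5 GB, which is why models in that class are a comfortable fit for many laptops while much larger ones are not.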

There are several applications that make using local models easier. Here are the ones I often point people to because they have simple UIs. Some even let you chat with your own files.

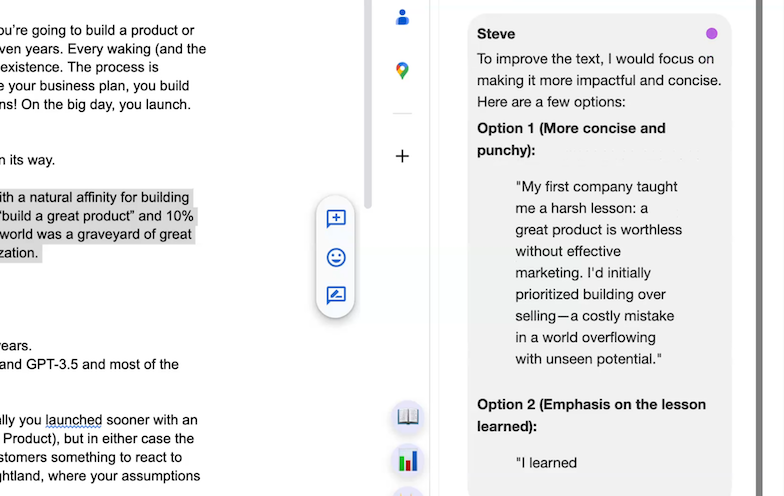

In general, if you download one of these, you'll be given the option to pick a model to download from its library. Picking one of the more recent Meta Llama or Mistral models in the 4GB range is usually a safe bet. You could also try DeepSeek R1 to see what all the buzz is about - using one of these tools is a really easy and secure way to do so without having to worry about where your data is going.

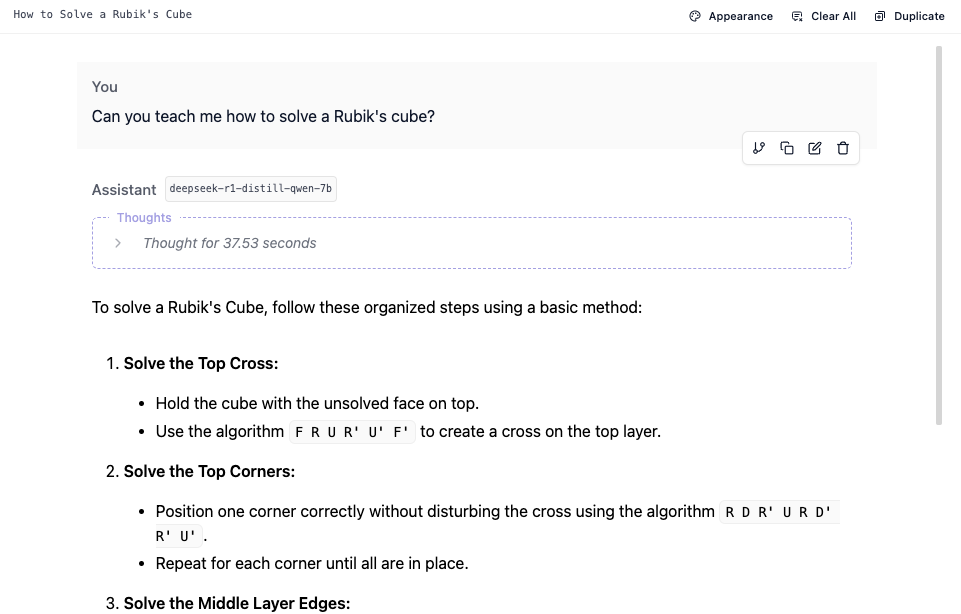

(The picture above shows me chatting with DeepSeek R1 in LM Studio running on my MacBook.)
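If you're curious what "connecting" to a local model looks like under the hood, here is a minimal sketch using Ollama's local HTTP API. It assumes Ollama is installed and running on its default address (`http://localhost:11434`) and that you've already pulled a model; the model name `llama3.2` is just a placeholder for whichever model you downloaded, and `build_request` and `ask_local_model` are helper names I made up for this example. This is not how Ask Steve itself is implemented, only an illustration of the idea.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint. The host is localhost,
# so the request stays on your own computer.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example usage (requires a running Ollama server with the model pulled):
# print(ask_local_model("Explain in one sentence why local models are private."))
```

The thing to notice is the URL: it points at `localhost`, which is your own machine. LM Studio and Jan can also run a local server in a similar way, though their addresses and request formats differ from the one sketched here.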

-- rajat
